Technologies to Execute Natural Language Processing (NLP)

Artificially generated Shakespeare mimics the language and form of the original.

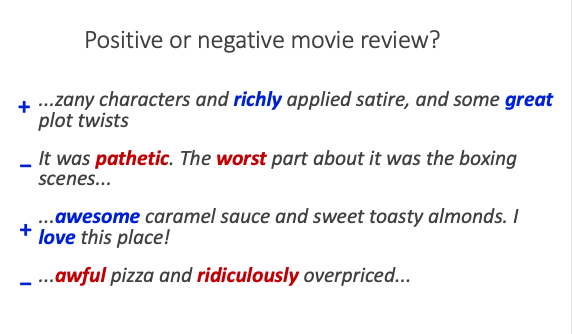

The following is a sentiment analysis using BERT, a Transformer model that we will encounter in a future post.

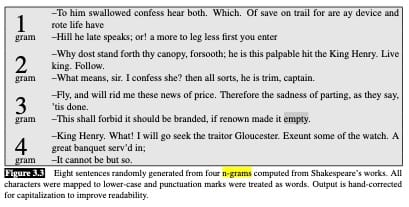

Below we use n-grams to generate Shakespeare. An n-gram model considers only the previous n − 1 words when it selects each word. Thus a 3-gram (trigram) uses the preceding 2 words to choose the next word by probability: after “A beautiful”, it might pick {sunset, woman, day, etc.}. Currently, Transformers are the preferred models for natural language processing (NLP) and computer vision.
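To make the n-gram idea concrete, here is a minimal sketch, assuming a word-level trigram model (each word is chosen from the preceding two) trained on a toy corpus that echoes the “A beautiful …” example. The corpus and the function names `build_ngram_model`, `next_word_probs`, and `generate` are hypothetical illustrations, not the code this post uses to generate Shakespeare; to imitate Shakespeare you would feed in his text instead of the toy corpus.

```python
import random
from collections import Counter, defaultdict


def build_ngram_model(words, n=3):
    """Map each (n-1)-word context to a Counter of the words that follow it."""
    model = defaultdict(Counter)
    for i in range(len(words) - n + 1):
        context = tuple(words[i:i + n - 1])
        next_word = words[i + n - 1]
        model[context][next_word] += 1
    return model


def next_word_probs(model, context):
    """Turn the raw follow-on counts for one context into probabilities."""
    counts = model.get(tuple(context), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def generate(model, seed, length, rng):
    """Extend the seed one word at a time, sampling by n-gram probability."""
    out = list(seed)
    k = len(seed)  # context size, i.e. n - 1
    for _ in range(length):
        counts = model.get(tuple(out[-k:]))
        if not counts:
            break  # unseen context: a plain n-gram model has nothing to predict
        words, weights = zip(*counts.items())
        out.append(rng.choices(words, weights=weights)[0])
    return " ".join(out)


# Hypothetical toy corpus: "." marks sentence ends.
corpus = "a beautiful sunset . a beautiful day . a beautiful day . a beautiful woman ."
model = build_ngram_model(corpus.split(), n=3)

print(next_word_probs(model, ["a", "beautiful"]))
# {'sunset': 0.25, 'day': 0.5, 'woman': 0.25}
print(generate(model, ["a", "beautiful"], 6, random.Random(0)))
```

Because “day” follows “a beautiful” twice as often as “sunset” or “woman” in this corpus, it is sampled twice as often. The model has no memory beyond its two-word window, which is why n-gram text can sound locally plausible yet wander globally.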

The technology for natural language processing (NLP) has evolved from n-grams to LSTMs (long short-term memory networks) to Transformers. The following video traces this evolution and the mechanics of NLP. Feel free to skip the math in the last minute and a half.

I use a storyboard from an Alfred Hitchcock movie to demonstrate.